Here we are, already in the second week of February. I wonder how many New Year's resolutions have survived the return to work.

This year I didn't bother with any. Back in November, though, I did resolve to read more technical books, and in particular to focus on the 'classics'. My motivation was stirred by Panagiotis Louridas's essay 'Rereading the Classics' from the book Beautiful Architecture. In it, Louridas examines the structure of Smalltalk and tries to reason why it achieved greater lasting success in blazing a trail for others to follow than as a practical working programming environment.

Louridas suggests that Smalltalk is a classic and, by way of justification, quotes Italo Calvino's Why Read the Classics (1986):

The classics [...] exert a peculiar influence, both when they refuse to be eradicated from the mind and when they conceal themselves in the folds of memory, camouflaging themselves as the collective or individual unconscious.

A classic does not necessarily teach us anything we did not know before. In a classic we sometimes discover something we have always known (or thought we knew), but without knowing that this author said it first, or at least is associated with it in a special way. And this, too, is a surprise that gives much pleasure, such as we always gain from the discovery of an origin, a relationship, an affinity.

The classics are books which, upon reading, we find even fresher, more unexpected, and more marvelous than we had thought from hearing about them.

A classic is a book that comes before other classics; but anyone who has read the others first, and then reads this one, instantly recognizes its place in the family tree.

Smalltalk is a language that most of us have heard about but have rarely seen, and after being introduced to it I was inspired to start digging up any further literature I could find. The more that I read, the more that I appreciated its cleverness. Smalltalk can be eerily familiar, and as you begin to grok its syntax it is easy to recognise aspects that have inspired certain features of our 'modern' OO languages.

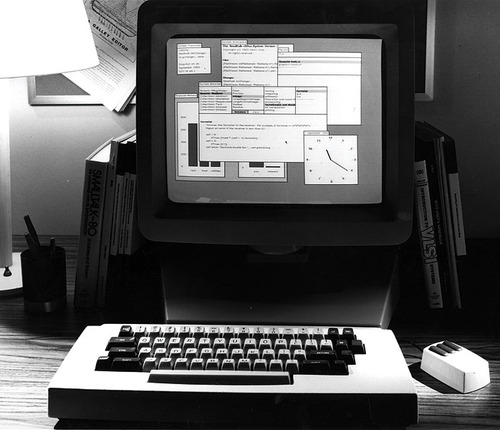

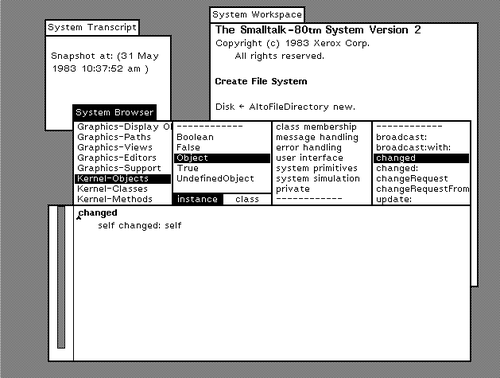

It is striking that the language itself (and indeed much of the material written about it) is so old. The most modern version of Smalltalk is Smalltalk-80 and, although there are modern implementations, the overwhelming bulk of the syntax and environment still adhere to the 1980 standard. Yet download Pharo, read Alan Kay's The Early History of Smalltalk (1993), or Dan Ingalls's Design Principles behind Smalltalk (1981) and it all still seems contemporary. The language itself, which was controversial at the time for eschewing ALGOL syntax, has aged very well. Ruby may have made them fashionable, but Smalltalk sported things like block closures over a quarter of a century ago. Reflection, metaprogramming and dynamic typing were all in Smalltalk in the 70s. Even the idea of using virtual machines to host the programming and operational environment seems remarkably current, as we increasingly move to abstract our programs and programming environments from bare metal.

It is humbling to see so many ideas that we take for granted today already implemented on a platform that is over a quarter of a century old. It is like marvelling at how the Egyptians built the pyramids. Sure, we could do it now, no sweat. But we have so much more raw engineering knowledge to throw at the problem. Alan Kay, Dan Ingalls, Adele Goldberg and the rest of the team at the Xerox PARC Learning Research Group designed and implemented Smalltalk with less computing power than my fridge has today. Although the team formalised most of the vocabulary of OO software development while building Smalltalk, it's hard not to feel that some of the energy and innovation of Kay's thinking didn't survive the C++ and Java succession.

Our discipline is still quite young when compared to traditional engineering or the sciences. Yet we seem to keep facing the same problems over and over again. The only difference is perhaps a few orders of abstraction, bigger piles of data and slightly more exotic technologies. When I think that the fundamental concept of 'Agile', or at least 'iterative', development was doing the rounds in the 60s, I wonder what other insights are out there, buried in the forgotten past.

Maybe one reason we tend to forget what others have learned is that the average developer only reads one technical book per year. That means a sizeable percentage of software professionals do not actually read any! I think if I average over my professional working career (after graduating at the end of 2004) I would probably be batting one per year too. If I were to look over the last three years, maybe two per year. I wonder how many mistakes I might have avoided in that period had I read Fred Brooks's The Mythical Man-Month in 2004 rather than 2012.

My excuse has always been a lack of quality time. Last July I left my full-time job for the world of consulting/freelancing. I imagined it would be easier to read more. In practice it has been, but not as easy as I thought or hoped.

I have a young daughter, my partner has returned to work and, although I work from home, I still put in 50-60 hour weeks. It doesn't leave a lot of spare time, and what spare time there is usually comes late at night when it's hard to concentrate.

Since I made a determined effort to read more, I've read four books cover to cover, and cherry-picked bits out of another four. It feels good, and the trick I've found is to read a chapter at a time, whenever you can: just before dinner, over coffee or lunch, waiting at the supermarket or just before bed. I found that by reading every day, even if it was just a little bit, I was starting to get through entire books.

It requires conscious effort though, and some material is more suited to this style of reading than others. Just before bed after a long day, it's pointless trying to delve into SICP. It helps to read certain books during the work day. For example, I've been re-reading GOOS and going through Kent Beck's TDD by Example over lunch. I actively practice TDD, so reading a chapter from one of these books at midday helps me relate it directly to what I'm working on when I go back into the office.

I have a huge reading list set up in Google Reader, and I'm starting to think it's distracting. I have a slight OCD in that I need to keep the unread count at zero. At the end of a thirty-minute work sprint, I would take five minutes to quickly flick through the list. It is distracting, actually, and most of the content is superficial. The benefit of reading a book over a blog is the tendency for a book to have its ideas more fully formed and logically structured. I realise the irony of saying this while writing a blog myself. I feel that blogs have their place, but I have been spending far more time reading blogs than reading books. I am now leaving my Google Reader list unread for longer and, after a few thirty-minute sprints, picking up a book instead.

As I wrote above, Louridas inspired me to learn more about Smalltalk, and any research into the language leads to Alan Kay's ACM paper The Early History of Smalltalk. The paper, beyond providing a wonderful insight into the language and Xerox PARC, is the source of some great quotes. One of my favourites goes:

Where Newton said he saw further by standing on the shoulders of giants, computer scientists all too often stand on each other’s toes.

In software engineering, we are so busy looking forward that we don't look back often enough. There is such a wealth of knowledge out there, already considered and documented. I think we should all make more of an effort to rediscover it.