"The big idea is messaging" (Kay98). It's a quote cited early on in Growing Object-Oriented Software, Guided by Tests (GOOS), a book that looks at Test Driven Development (TDD) using Mock Objects. This idea, that messaging is central to Object Oriented Analysis and Design (OOAD), drives much of what the book presents.

I think that as OOAD has matured over the past decade, thinking of classes as hierarchical constructs has fallen out of favour. Increasingly, OOAD is about managing the collaboration of a large number of small, independent objects. In such designs, the solution to the problem lies in the way the developer defines the software's object graph, i.e. its composition. In a design focussed on getting the composition of objects just so, communication between objects is the important thing, much more so than classification.

In GOOS, TDD is presented as an exercise to first understand and then improve the messaging protocols between objects. GOOS demonstrates that Mock Objects are an ideal tool for discovering these protocols. Even in 2012, this is an unusual view for a lot of developers. I've always used Mock Objects as a Test Stub or Double: a placeholder object that induces some specific behaviour I want to test, or isolates the unit of code I'm testing. GOOS sees Mocks differently, as a means of representing roles within a system. The authors say a Mock Object is not a Stub, but instead an interface to some behaviour or role. Mock Roles, not Objects, a paper written by Freeman, Pryce, et al. back in 2004, explains this concept and lays much of the groundwork for GOOS.
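To make the "mock roles" idea concrete, here is a minimal sketch in plain Java. It is not code from the book: `Auction`, `AuctionSniper`, `currentPrice` and `MockAuction` are simplified, hypothetical names loosely modelled on the book's sniper example. The book would express the expectation with JMock; a hand-rolled mock is used here only to keep the sketch dependency-free. The point is that the test checks which message the sniper sends to the `Auction` role, rather than stubbing a return value.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical role: something the sniper can send bids to.
interface Auction {
    void bid(int amount);
}

// Object under test: reacts to an incoming price message by sending a bid message.
class AuctionSniper {
    private final Auction auction;

    AuctionSniper(Auction auction) {
        this.auction = auction;
    }

    // Incoming message: the auction reports its current price and minimum increment.
    void currentPrice(int price, int increment) {
        auction.bid(price + increment);
    }
}

// Hand-rolled mock of the Auction role: it records the messages it receives
// so the test can verify the protocol between the two objects.
class MockAuction implements Auction {
    final List<Integer> bids = new ArrayList<>();

    @Override
    public void bid(int amount) {
        bids.add(amount);
    }

    void assertReceivedSingleBid(int expected) {
        if (bids.size() != 1 || bids.get(0) != expected) {
            throw new AssertionError("expected one bid of " + expected + " but got " + bids);
        }
    }
}

class AuctionSniperTest {
    public static void main(String[] args) {
        MockAuction auction = new MockAuction();
        AuctionSniper sniper = new AuctionSniper(auction);

        sniper.currentPrice(1001, 25);

        // The test is about the conversation: exactly one bid of price + increment.
        auction.assertReceivedSingleBid(1026);
        System.out.println("sniper sent the expected bid");
    }
}
```

Because the sniper depends only on the `Auction` interface, writing the test first is what forces that role, and the `bid` message it understands, into existence.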

GOOS itself is structured into five sections. The first couple of sections (very) briefly introduce the reader to TDD, the basic tenets of Agile development (very heavily influenced by Extreme Programming, XP), testing tools (JUnit, JMock, Hamcrest, WindowLicker) and the authors' OOAD philosophy.

The overwhelming impression I get from Freeman and Pryce's introduction to TDD and OOAD is that they see writing tests less as an exercise in producing a regression-catching suite and more as a design exercise. By writing a failing test up front, you have an immediate, testable statement of intent about what the software will do and, just as importantly, what it won't do. Focussing on the narrow slice of behaviour represented by a single test limits the scope of what needs to be done. The act of satisfying the test, making it pass, focuses the developer's mind on the problem domain and forces both the developer and the customer to think about which parts of the environment affect the test. Flushing out dependencies, object peers and services early on is a good thing.

Too often in Big Design Up Front approaches, you write a ton of code according to a predetermined (but unchecked) idea of the environment. When you go to hook everything up at the end, you can find, to the horror of your project sponsor (and your spouse, who won't see you for at least the next week), that nothing actually hooks up. Or worse, you have misinterpreted what the sponsor wanted. In a Test Driven Design, if elements of the system are incompatible, you're alerted to it early. If you have gotten the requirements completely wrong, the customer can see it straight away. TDD takes 'Fail Fast' to its logical extreme.

The meat of the book is a worked example. The authors describe a fictional auction sniper system that connects to an auction, makes bids on items and either wins or loses. It's a simple example, but complicated enough to run into common issues developers face when building OO software. Certainly the authors have little trouble filling 150 pages as they work from an abstract set of stories to concrete code. What is good about their example is that you see the code transform in stages as extra features are added. The authors are careful to explain the motivations for each transformation, and they tie it back to the TDD and OOAD principles introduced in the first two sections.

After working through the Auction Sniper application using Java, JUnit, JMock, Hamcrest and WindowLicker, the book gives a brief recap and moves swiftly on to its fourth and fifth sections.

The fourth section covers 'sustainable TDD', an important and increasingly relevant topic for many developers. The burden of maintaining poorly designed test suites is a drag on developer productivity. Rather than freeing developers to improve the structure of their code, bloated, indecipherable test suites become a handbrake. GOOS goes through techniques to keep test suites effective, flexible and, importantly, expressive. The idea that software is about communication is emphasised throughout the book, and tests are no different. Tests should express the developer's intent and serve as rough-and-ready documentation for a unit of code. I found the advice on test data builders (techniques for creating test data for use in your test cases) particularly valuable.
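A test data builder can be sketched roughly like this. It is an illustration in the spirit of the book's advice, not the book's own code; `Order`, `OrderBuilder`, `anOrder` and the default values are hypothetical. The builder supplies safe defaults for every field, so each test spells out only the values that matter to it.

```java
// Hypothetical value object that tests need instances of.
final class Order {
    final String customer;
    final String item;
    final int quantity;

    Order(String customer, String item, int quantity) {
        this.customer = customer;
        this.item = item;
        this.quantity = quantity;
    }
}

// Test data builder: every field starts with a sensible default,
// and each with... method overrides one field and returns the builder.
final class OrderBuilder {
    private String customer = "Default Customer";
    private String item = "Default Item";
    private int quantity = 1;

    static OrderBuilder anOrder() {
        return new OrderBuilder();
    }

    OrderBuilder withCustomer(String customer) {
        this.customer = customer;
        return this;
    }

    OrderBuilder withItem(String item) {
        this.item = item;
        return this;
    }

    OrderBuilder withQuantity(int quantity) {
        this.quantity = quantity;
        return this;
    }

    Order build() {
        return new Order(customer, item, quantity);
    }
}

class OrderBuilderDemo {
    public static void main(String[] args) {
        // This test only cares about quantity, so that is all it mentions.
        Order bulk = OrderBuilder.anOrder().withQuantity(500).build();
        System.out.println(bulk.customer + " ordered " + bulk.quantity + " x " + bulk.item);
    }
}
```

The payoff is readability: a line like `anOrder().withQuantity(500).build()` states the test's intent, and adding a new field to `Order` touches the builder rather than every test that constructs one.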

The final section tackles the really hard stuff: persistence (and, by extension, any sort of frameworky data service), asynchrony and, lastly, concurrency.

I found GOOS easy to read, and its chapters are short enough to read on the train or bus, or in short bursts. Physically, the paper is of high quality and the typesetting is clear and easy to read. I really like the images and diagrams, which are simple, authentic and support what is being discussed. I felt the introductory sections on TDD, and particularly on the authors' OO philosophy, were fairly succinct, perhaps too succinct. But as they state from the outset, this is not a book on TDD. And anyway, Meszaros07 should be enough TDD for any human being.

GOOS comes with an outstanding bibliography. Freeman and Pryce's academic backgrounds and broad reading are on display in the depth and quality of their references. Although GOOS is a fairly domain-specific (Mock Object) text, it serves as a wonderful launching pad for further OOAD and TDD reading.

I like that the authors are people who practise what they preach. GOOS is a book for people who write real code in the real world. Sadly, there seem to be too many authors, 'consultants' and coaches these days who talk a lot about programming and programming techniques yet seldom practise them in the wild. GOOS reads like a book born out of brutal trench warfare with Objects. It is refreshing to read a book detailing a principled design philosophy and practice that has been tested in the dirty, unwashed world of Enterprise.

I've slowly been pruning back my physical book collection and trying to maintain a library of what I would consider 'classics': the GOF book, Fowler's Refactoring and PoEAA books, Beck's XP White Book, the Prag Prog Book, Kernighan and Ritchie's C Book, SICP and Knuth's The Art of Computer Programming series (and, if I can ever get it, Mike Abrash's Black Book). For me, this book fits into that category. It represents a decade of work and thinking, neatly explained by two highly skilled and, above all, practical developers who were at the heart of it all.

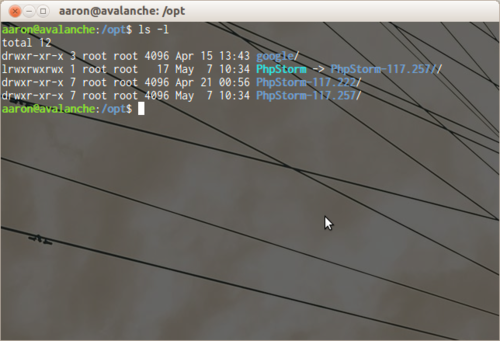

I highly recommend this book to anyone actively practising TDD, and more generally to anyone with an interest in Object Oriented Software Design and Practice. While GOOS is a 'Java book' and I program in PHP day to day, its principles, practices and overarching philosophy translate easily.

Follow Steve Freeman @sf105 on Twitter, and read his blog http://www.higherorderlogic.com/. Nat Pryce is @natpryce and blogs at http://www.natpryce.com/.

Also be sure to check out the excellent mailing list http://groups.google.com/group/growing-object-oriented-software.