Right now it's very simple to get the milestone builds of the PHP Development Tools (PDT) 3 up and running, and they are a significant improvement on the current Helios SR2 release.

Download the 'classic' version of Eclipse 3.7 Indigo from http://www.eclipse.org/downloads/ and install it.

Once installed, launch Eclipse and navigate to Help -> Install New Software.

Add the Indigo update site 'http://download.eclipse.org/releases/indigo'. This will take some time to add, so give it 5 minutes or so.

UPDATE 20/10/2011

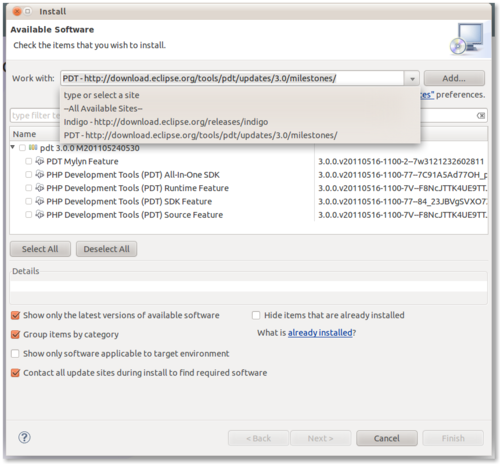

The following step is no longer required, as the PDT 3.0 series is now in the main Indigo update repository. Once the Indigo update site is added, add the PDT 3.0 update site: http://download.eclipse.org/tools/pdt/updates/3.0/milestones/

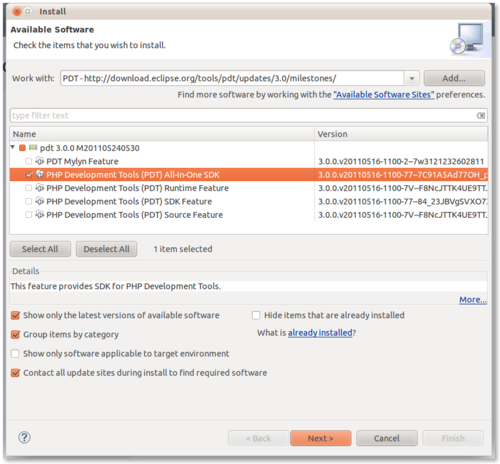

Now, to install, simply select PDT Development Tools All in One SDK (leave the others unselected) and click Next. The installation shouldn't take more than a few minutes.
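If you'd rather script the install than click through the dialog, Eclipse also ships a command-line p2 director application. The sketch below is a dry run: it only prints the two commands (one to list what the Indigo site offers, one to install) rather than running them. `ECLIPSE_BIN` and `PDT_IU` are my own placeholder names, not something from the steps above, and I haven't filled in the PDT feature ID. Use the listing command to look it up first.

```shell
#!/bin/sh
# Dry-run sketch: prints p2 director commands instead of executing them.

# Assumption: path to the Eclipse launcher; adjust for your install.
ECLIPSE_BIN="${ECLIPSE_BIN:-./eclipse}"

# The Indigo update site used in the steps above.
INDIGO_SITE="http://download.eclipse.org/releases/indigo"

# Hypothetical placeholder: replace with the PDT feature ID that the
# listing command reports for your repository.
PDT_IU="${PDT_IU:-REPLACE_WITH_PDT_FEATURE_ID}"

# Build a director command line for the given trailing arguments.
director_cmd() {
  printf '%s -nosplash -application org.eclipse.equinox.p2.director -repository %s %s\n' \
    "$ECLIPSE_BIN" "$INDIGO_SITE" "$1"
}

# Command that lists the installable units available on the site.
LIST_CMD=$(director_cmd "-list")

# Command that installs the chosen unit into the Eclipse install.
INSTALL_CMD=$(director_cmd "-installIU $PDT_IU")

echo "$LIST_CMD"
echo "$INSTALL_CMD"
```

Once the printed commands look right for your setup, you can run them by hand. As with the dialog, expect the listing step to take a while against the full Indigo repository.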
